Recently Published by Autodesk Researchers

Autodesk Research teams regularly contribute to peer-reviewed scientific journals and present at conferences around the world. Check out some recent publications from Autodesk researchers.

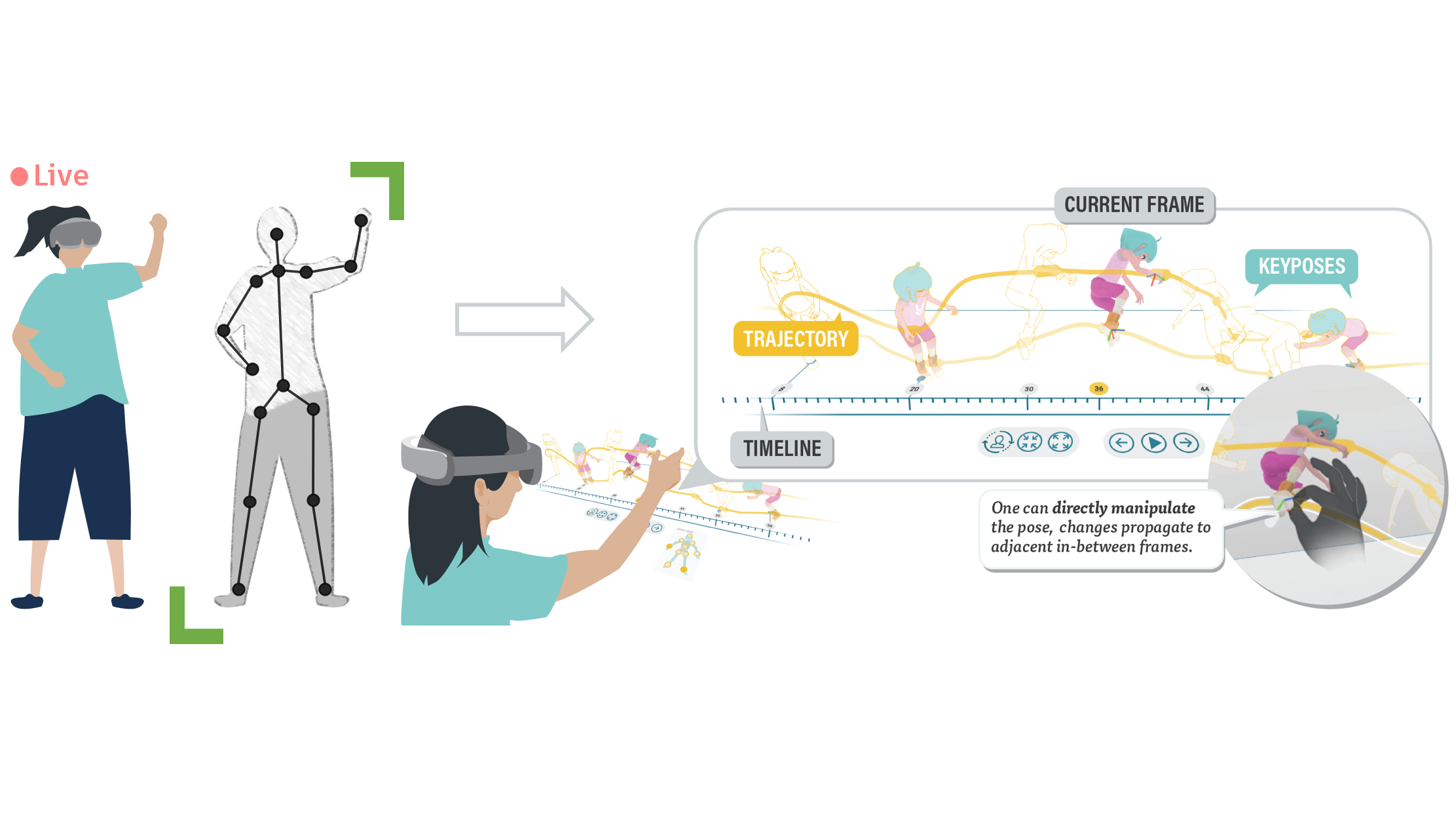

Editing character motion in Virtual Reality requires working with both spatial and temporal data using controls with multiple degrees of freedom. In existing tools, spatial and temporal controls are separated, which makes it difficult to adjust poses over time and to predict the effects of an edit on adjacent frames. To address this challenge, the team introduced TimeTunnel, an immersive motion editing interface that integrates spatial and temporal control for 3D character animation in VR. TimeTunnel offers an approachable editing experience built on two elements. The first is keyposes: a set of representative poses, computed automatically, that concisely depict the motion. The second is trajectories: 3D animation curves that pass through the joints of the keyposes to represent the in-betweens. By superimposing both trajectories and keyposes onto the 3D character, TimeTunnel lets users edit space and time in a single view.

TimeTunnel Live: Recording and Editing Character Motion in Virtual Reality

TimeTunnel Live is an animation authoring interface for recording and editing motion in Virtual Reality. It leverages state-of-the-art tracking to capture a user’s body motion, facial expressions, and hand gestures. To make captured motion easier to edit, the team offers an immersive motion editing interface that integrates spatial and temporal control for character animation. The system extracts keyposes from the 3D character animation and superimposes them along a timeline, then connects the joints across the keyposes with 3D trajectories that show the character’s movement. The result is an interactive experience for exploring the future of immersive animation technologies.

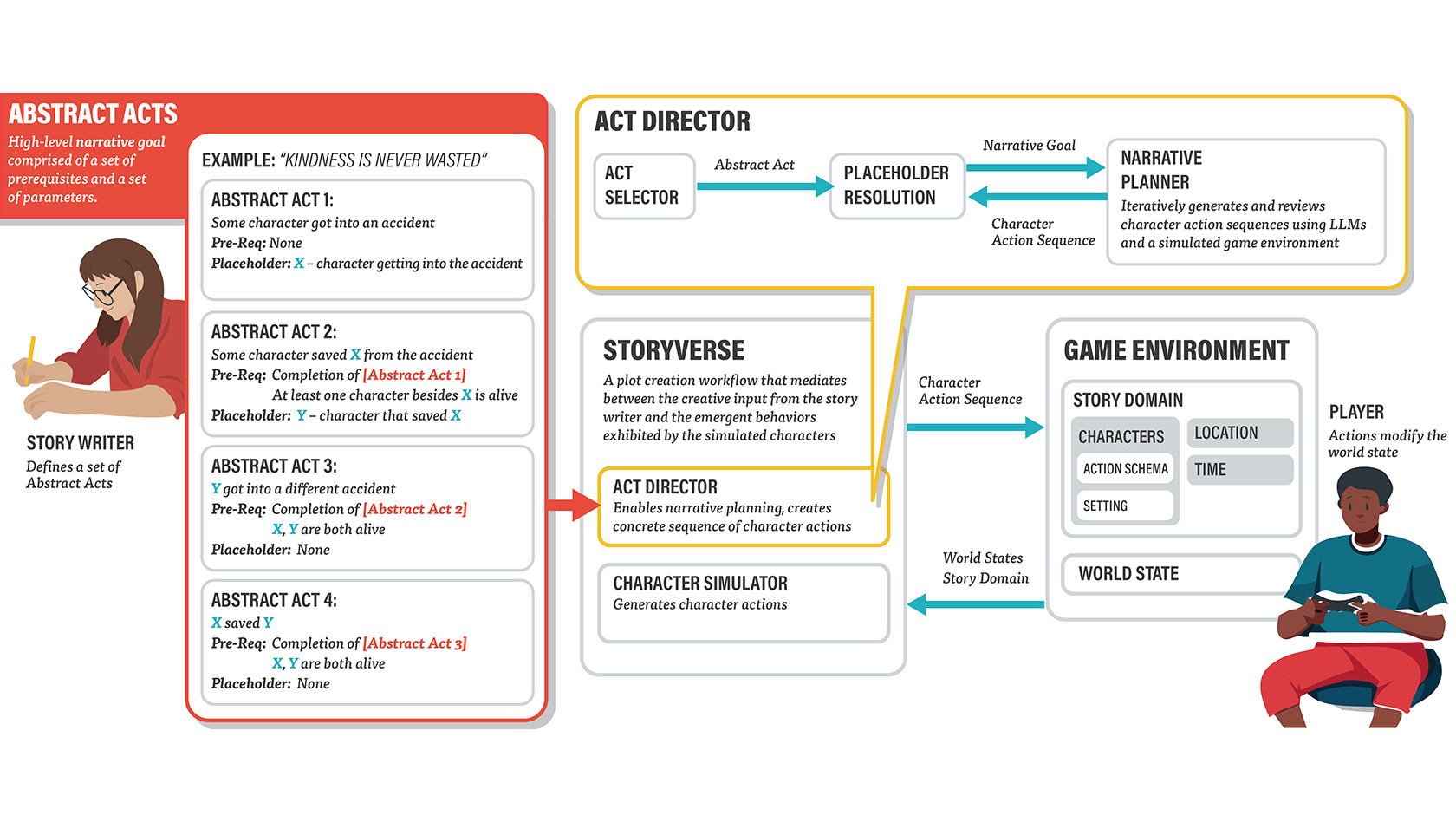

Automated plot generation for games enhances the player’s experience by providing a rich, immersive narrative that adapts to the player’s actions. Traditional approaches rely on symbolic narrative planning, which requires extensive knowledge engineering and therefore limits the scale and complexity of the generated plots. Recent advances use Large Language Models (LLMs) to drive the behavior of virtual characters, allowing plots to emerge from interactions between characters and their environments. However, because such decentralized plot generation is emergent, it is difficult for authors to direct how the plot progresses. In this paper, the team proposes a new plot creation workflow that mediates between a writer’s authorial intent and the emergent behaviors of LLM-driven character simulation, using a novel authorial structure called “abstract acts.” This process creates “living stories” that dynamically adapt to different game world states, resulting in narratives co-created by the author, the character simulation, and the player.

Get in touch

Have we piqued your interest? Get in touch if you’d like to learn more about Autodesk Research, our projects and people, and potential collaboration opportunities.
