How Digital Twins Accelerate AI Model Deployment

Deploying AI models in the real world requires precision, reliability, and safety. Whether identifying components on a production line or guiding an autonomous system, models must be trained on diverse, high-quality data to ensure robust performance. However, obtaining real-world training data at the necessary scale and variety is expensive, time-consuming, and often infeasible.

Digital twin simulation offers a scalable solution, rapidly generating vast amounts of synthetic training data under controlled and customizable conditions. At Duality, we developed Falcon, our digital twin simulation platform, to bridge the gap between AI training needs and real-world deployment. In this blog we dive into how digital twins are accelerating AI model development, and how our work in the Autodesk Research Residency Program is increasing accessibility to this approach.

Training AI Models with Digital Twins

Projects pioneering novel technologies thrive when industry partners are engaged to test and provide essential feedback. During our residency, Autodesk Research’s Robotics Team proved to be an ideal collaborator for exploring new digital twin methodologies. The team’s work on computer-vision-aided robotic assembly opened the door to comparing the efficacy of digital twins created through traditional (3D mesh) and novel (Gaussian splatting) 3D reconstruction techniques. In collaboration with the team, we applied digital twin simulation to a robotic assembly task: assembling a skateboard using two six-degree-of-freedom (6DOF) robotic arms. To perform this task, the robot’s AI vision system needs to demonstrate reliable performance with respect to:

- Object detection: Identify components the robot must pick up.

- Pose estimation: Determine the orientation of each component for accurate manipulation via the arm’s end effector.

- Robustness: Operate reliably under varying lighting and work area clutter conditions.

The Challenge: Training the vision model requires thousands of annotated images of every component, covering diverse positions, arrangements, lighting conditions, and degrees of visibility and clutter. Collecting all these images in the real world is tedious, time-consuming, and error-prone, with no guarantee of capturing the variety needed for reliable performance.

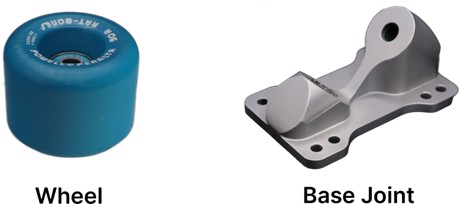

The Solution: Digital twins drastically simplify training data generation. By replicating the work environment (Fig 1) and skateboard components virtually (Fig 2), we can introduce controlled variations and capture synthetic images using a virtual camera sensor. Since Falcon knows the location of every object in the scenario, it can carry out automated annotations and provide precise object location and orientation data. The model is then tested with novel real-world images, and if model performance is lacking, we can iterate the simulation scenario to refine training data until optimal performance is achieved.
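To illustrate why annotation can be automated when the simulator knows where every object is, here is a minimal sketch that derives a 2D bounding box from a known 3D position. It is not Falcon's API: the function names, the pinhole camera model, and the axis-aligned box approximation are all assumptions made for this example.

```python
from itertools import product


def project_point(point, focal_px, cx, cy):
    """Project a camera-frame 3D point (x right, y down, z forward) to pixels."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (focal_px * x / z + cx, focal_px * y / z + cy)


def bbox_from_known_pose(center, half_extents, focal_px, cx, cy):
    """Return (xmin, ymin, xmax, ymax) enclosing an axis-aligned 3D box.

    Hypothetical helper: center and half_extents are given in the camera
    frame, in meters, which a simulator could supply for every object.
    """
    # Enumerate the eight corners of the 3D box.
    corners = [
        tuple(c + s * h for c, s, h in zip(center, signs, half_extents))
        for signs in product((-1, 1), repeat=3)
    ]
    pixels = [project_point(p, focal_px, cx, cy) for p in corners]
    us = [u for u, _ in pixels]
    vs = [v for _, v in pixels]
    return (min(us), min(vs), max(us), max(vs))
```

Calling `bbox_from_known_pose((0.0, 0.0, 2.0), (0.1, 0.1, 0.1), 500.0, 320.0, 240.0)` yields a box centered on the image center of a 640x480 frame. No human labeling is involved, because the label follows directly from the scene state.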

Fig 2. Example of some of the parts required for this project: skateboard wheels and base joints. Each part that the vision system must recognize required its own digital twin.

Building a Digital Twin Ecosystem for Scale

New technologies often face ecosystem barriers. In this case, adoption of digital twin simulation depends on access to high-quality digital twins. How can users obtain the digital twins they require at scale? Falcon supports digital twin creation from multiple sources, including:

- CAD file imports from Autodesk Fusion.

- 3D assets from technical artists.

- Geospatial data (e.g., satellite imagery and elevation surveys).

- Real-world object scanning with a custom-built digital twin rig, designed and built by Duality at the Autodesk Research Residency Program.

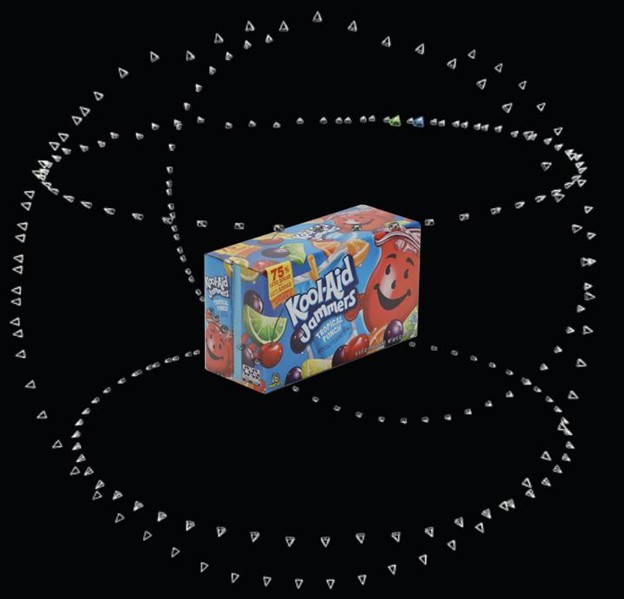

Our scanning rig captures objects up to a cubic meter in size within minutes (Fig 3, video). It employs a transparent turntable and an arm-mounted camera to generate images from all angles.

Fig 3. This image shows all the locations from which an image of the box of juice packets was taken to create a digital twin of that box.
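The capture pattern in Fig 3 can be approximated as camera positions on a sphere around the object: the turntable sets the azimuth and the arm sets the elevation. The sketch below is only an illustration under that assumption. The radius, step count, and elevation angles are made-up values, not the rig's actual settings.

```python
import math


def camera_positions(radius_m, turntable_steps, elevations_deg):
    """Return (x, y, z) camera positions relative to an object at the origin.

    Hypothetical model: each turntable step rotates the object, which is
    equivalent to moving the camera around it in azimuth.
    """
    positions = []
    for elev_deg in elevations_deg:
        elev = math.radians(elev_deg)
        for step in range(turntable_steps):
            azim = 2 * math.pi * step / turntable_steps
            positions.append((
                radius_m * math.cos(elev) * math.cos(azim),
                radius_m * math.cos(elev) * math.sin(azim),
                radius_m * math.sin(elev),
            ))
    return positions


# Example: 12 turntable stops at four arm elevations gives 48 viewpoints.
views = camera_positions(1.0, 12, [-30, 0, 30, 60])
```

A negative elevation places the camera below the object, which is presumably what the transparent turntable makes possible.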

Our automated processing extracts object masks and reconstructs 3D models using photogrammetry. Manual input is limited solely to non-visual information and metadata. This can be intrinsic values like mass, descriptive ones like brand, or even behaviors in the form of programmed scripts.

Video showing a sample scan of a product, along with the resulting digital twin.

The design, construction, and iteration of the digital twin rig from concept to production-ready version were made possible by our participation in the Autodesk Research Residency Program. 3D modeling and CAD work were carried out using Autodesk Fusion and Revit, while construction of the rig relied on access to, and guidance from, the Pier 9 Autodesk Technology Center Workshop.

Closing the Sim2Real Gap

A key challenge in leveraging digital twin-generated synthetic data for AI training is ensuring that models trained in simulation generalize effectively to real-world conditions. This requires a structured, quantifiable approach for evaluating the quality and effectiveness of synthetic data. At Duality, we apply the 3I’s Framework — Indistinguishability, Information Richness, and Intentionality — to systematically evaluate and refine our synthetic data*.

- Indistinguishability ensures that synthetic data closely mirrors real-world data, minimizing visual and statistical differences that could hinder model performance.

- Information Richness guarantees that the dataset is diverse, encompassing a wide range of lighting conditions, occlusions, and clutter variations to improve model robustness.

- Intentionality focuses on tailoring the synthetic data to the specific robotic tasks, ensuring that it captures the necessary variations and edge cases.
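As a rough illustration of Information Richness, the sketch below samples randomized scene parameters of the kind listed above. The parameter names and value ranges are assumptions chosen for this example, not values from our pipeline or from Falcon.

```python
import random
from dataclasses import dataclass


@dataclass
class SceneVariation:
    """One randomized scenario configuration (all fields are hypothetical)."""
    light_intensity: float      # relative brightness, 1.0 = nominal
    light_azimuth_deg: float    # direction of the key light
    clutter_objects: int        # number of distractor objects in view
    part_yaw_deg: float         # rotation of the target part
    occlusion_fraction: float   # share of the part hidden from the camera


def sample_variations(count, seed=0):
    """Draw `count` scene variations from fixed, assumed ranges."""
    rng = random.Random(seed)  # seeded so a dataset can be regenerated
    return [
        SceneVariation(
            light_intensity=rng.uniform(0.3, 1.5),
            light_azimuth_deg=rng.uniform(0.0, 360.0),
            clutter_objects=rng.randint(0, 8),
            part_yaw_deg=rng.uniform(0.0, 360.0),
            occlusion_fraction=rng.uniform(0.0, 0.6),
        )
        for _ in range(count)
    ]
```

Intentionality would then show up as a choice of ranges: if the real work cell is always dim, for instance, the lighting range can be narrowed toward low intensities rather than sampled uniformly.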

By rigorously evaluating synthetic data using the above criteria and testing the vision model performance on novel real-world data, we can quickly and iteratively refine our training data until required model performance is achieved. This structured approach enables more reliable Sim2Real transfer, ensuring that our AI models trained in Falcon’s simulation environment perform effectively when deployed in the real world.

*Note: A deeper exploration of the 3I’s Framework can be found here.

Advancing Digital Twin Creation: 3D Meshes vs. Gaussian Splatting

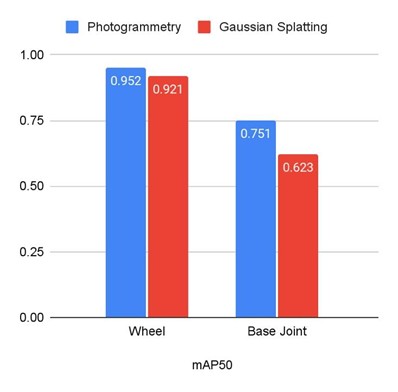

The need to close the Sim2Real gap motivated us to look for the best rendering approach for synthetic data by comparing the established mesh-based rendering technique with recent advances in Gaussian splatting. Initially, our rig produced only photogrammetry-derived 3D meshes. However, our recent work with Autodesk Research Robotics explored an alternative: Gaussian splatting, which reconstructs objects faster and from fewer images. For robotic applications, where spatial orientation is critical, efficient 3D reconstruction can be a highly valuable feature.

Comparing the Techniques

While there are many significant differences and nuances, for our use case it is relevant to note:

- Gaussian Splatting excels at reconstructing smooth or featureless surfaces and produces more accurate digital twins when dealing with incomplete data.

- 3D Meshes via Photogrammetry easily enable adjustable lighting in simulation, whereas Gaussian Splatting currently embeds lighting into the model, limiting our ability to customize conditions.

Fig 4. Examples of skateboard parts digitally reconstructed via photogrammetry and Gaussian splatting.

To quantify the comparison, we carried out an end-to-end test by generating digital twins of skateboard parts using each technique, and then training an object detection model with synthetic data derived from each type of digital twin. Though the Gaussian-splat-derived digital twins yielded slightly lower object detection performance overall, the test confirmed that this technique could lead to more robust and performant digital twin capture in the near future (Fig. 6).

Fig 6. Comparing object detection performance of a computer vision model trained with synthetic data generated from photogrammetry-based and Gaussian-splatting-based digital twins.
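This post does not specify which metric underlies the comparison. As a generic illustration only, a common building block for scoring object detection is intersection over union (IoU) between predicted and ground-truth boxes. The sketch below assumes `(xmin, ymin, xmax, ymax)` boxes and a 0.5 match threshold, and its function names are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two (xmin, ymin, xmax, ymax) boxes."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # Clamp at zero so non-overlapping boxes give no intersection area.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def matched_fraction(predictions, ground_truth, threshold=0.5):
    """Fraction of ground-truth boxes overlapped by at least one prediction."""
    if not ground_truth:
        return 0.0
    hits = sum(
        1 for gt in ground_truth
        if any(iou(pred, gt) >= threshold for pred in predictions)
    )
    return hits / len(ground_truth)
```

Running the same scoring on real test images for a model trained on each type of digital twin gives two numbers that can be compared directly, which is the general shape of the end-to-end test described above.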

Conclusion

As digital twin simulation increasingly supports and accelerates advanced AI training, demand for rapid access to high-fidelity digital twins will continue to grow. The digital twin rig developed during the Autodesk Research Residency Program has already empowered customers to efficiently generate extensive datasets for training computer vision models. Our collaboration with the Autodesk Research Robotics Team—specifically investigating Gaussian splats for digital twin creation—demonstrates significant potential for reducing image volume requirements, streamlining digital twin production, and substantially accelerating AI model training workflows. The support from the Autodesk Research Residency Program for proof-of-concept and early-stage development has been instrumental in fostering these industry-advancing initiatives, providing vital tools, unique resources, and a collaborative community at the Autodesk Technology Center in San Francisco.

Apurva Shah is CEO of Duality AI; Felipe Mejia is a Senior AI/ML Engineer at Duality AI; Sudhansh Peddabomma was a summer 2024 intern at Duality AI; and Mish Sukharev is Communications Manager at Duality AI.
