The Problem of Generative Parroting: Navigating Toward Responsible AI (Part 1)

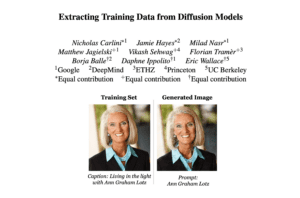

The advent of generative AI models like Stable Diffusion, DALLE, and GPT has revolutionized content creation across various domains. Despite their transformative impact, their application in trust-critical scenarios remains constrained. This limitation arises from a common challenge in deep learning models: overfitting to training data, leading to the generation of nearly identical replicas during inference which we call “data parroting.” In this series of blog posts, I delve into the critical challenge of data parroting in generative AI models from a trust and technical perspective. In part one, I explore the customer trust concerns of parroting. Our aim is to shed light on the importance of addressing data parroting for ensuring the responsible deployment of generative models in trust-sensitive applications, ultimately safeguarding customer data and creativity, and fostering trust in AI technology.

Data parroting poses significant concerns for customer trust for several reasons. One trust concern is the possibility that sensitive information – whether the information is personally identifiable information or confidential information – could be exposed by the parroted results to unintended recipients. Another trust concern is the user’s confidence that the results will be sufficiently creative and original to be free of liability such as copyright infringement.

The issue of data-parroting/memorization not only affects models like Stable Diffusion, DALLE, and GPT but could also potentially impact most generative models despite any architectural differences. Data parroting distinguishes itself from other challenges encountered in generative model training, such as mode dropping and collapse. The identification of individual parroted samples remains a challenge as parroting can be small/local but still important. As an example, a small logo (only a few pixels) parroted in a generated image would not be straightforward to detect with common representation learning techniques. Also, certain objects must be generated exactly as they are, for example, stop signs on streets. When we have a dataset consisting of a mix of samples, where some samples can be directly copied (parroted) and others cannot, it poses a challenge.

Parroting encompasses two trust concerns: data exposure and copyright infringement. Recognizing the distinctions between these two examples of trust concerns is essential for formulating precise strategies aimed at addressing parroting across various levels of granularity, a task that often necessitates input from domain and legal experts. It is important to acknowledge that, even in cases where an occurrence does not expose sensitive data or is not deemed copyright infringement, the possibility that a separate occurrence of parroting could implicate either sensitive data exposure or copyright infringement can still undermine customer trust.

The legal landscape around generative AI is evolving, with ongoing debates on how to protect sensitive data (both personal data and confidential data) and intellectual property rights while fostering innovation. The intricate balance between parroting, or memorization, in generative models and either sensitive data exposure or copyright concerns is deeply intertwined with legal considerations, particularly considering the challenges of evaluating and mitigating memorization. Generative models, by their nature, can reproduce, interpolate, or remix training data to create new outputs. However, when these models inadvertently memorize and reproduce identifiable parts of training data without transformation, they risk the possibility that output will expose sensitive information or, in the case of copyright-protected training materials, infringing on copyright laws. This potential for problematic parroting is significant because traditional evaluation metrics for machine learning models do not directly address the extent of data memorization.

Ensuring that generative models respect legal rights without stifling their ability to learn and create requires a nuanced understanding of both the technical challenges of preventing memorization and the legal frameworks governing data protection and copyright. This highlights the necessity for novel approaches in model training, evaluation, and legal compliance to navigate the complex interplay between AI capabilities and law.

At the same time, the interplay between parroting and customer trust raises questions about the role of the user in mitigating legal concerns – particularly as to copyright infringement concerns. What is the role of the user in reviewing generative model outputs for potential copyright issues and making necessary modifications? This approach is not perhaps a complete solution, and we still need prevention mechanisms to minimize the risk of data exposure.

In the next post, we’ll explore the challenges of achieving complete avoidance of parroting, consider whether parroting can be quantified as a metric, and highlight some potential areas for research.

The information provided in this article is not authored by a legal professional and is not intended to constitute legal advice. All information provided is for general informational purposes only.

Saeid Asgari is a Principal Machine Learning Research Scientist at Autodesk Research. You can follow him on X (formerly known as Twitter) @saeid_asg and via his webpage.

Get in touch

Have we piqued your interest? Get in touch if you’d like to learn more about Autodesk Research, our projects, people, and potential collaboration opportunities

Contact us