Revolutionizing Water Management

Part One: AI Techniques for Pump Optimization in Water Distribution Networks

Water is a precious resource essential for sustaining life and supporting various economic activities. Effective management of water distribution networks plays a vital role in ensuring its availability and efficient utilization. One critical aspect of water management is optimizing the operation of pumps within distribution networks, which are responsible for transporting water from sources to consumers.

Autodesk AI Lab and Innovyze joined forces at TechX 2022 – Autodesk’s largest internal, employee-driven conference – to explore how to integrate reinforcement learning (RL) techniques into Innovyze’s products related to water distribution. In just a year, the research project advanced to implement the RL-based solution into the existing infrastructure, adopting a hybrid RL approach to ensure a seamless transition, reliable testing, and risk mitigation by combining the strengths of the established query-based model and the new RL solution.

Collaborating on our Research

Traditionally, pump optimization in water distribution networks has relied on static and rule-based control strategies. However, these approaches often fail to adapt to dynamic changes in demand, network conditions, and energy costs, resulting in suboptimal performance and wasted resources. To address these challenges and revolutionize water management, researchers and engineers are exploring RL techniques.

RL is a branch of machine learning that focuses on training agents to make sequential decisions by learning from interactions with an environment. By applying RL techniques to water distribution networks, it becomes possible to develop intelligent systems that dynamically optimize pump operations, leading to significant improvements in efficiency, energy consumption, and overall system performance.

From Research to Product

Last year, teams from Innovyze and Autodesk AI Lab shared their ideas. This collaboration sparked research work aimed at applying RL techniques to water distribution networks. Through meticulous experimentation and analysis, the team achieved promising results within one year and the feature is already integrated into the product.

Building upon the initial positive outcomes Innovyze and Autodesk Research uncovered during the first year of research, the project progressed towards the implementation phase. The teams refined and adapted the solution developed during the research phase for deployment in a production environment. By leveraging the insights gained from their research, the team successfully integrated the RL-based solution into the existing water distribution network infrastructure.

To ensure a seamless transition and maintain trust throughout the process, the project team made a strategic decision to adopt a hybrid RL approach. Recognizing the importance of trust and reliability, the team devised a plan to gradually integrate the RL-based solution into the existing infrastructure while leveraging the baseline query-based solution. By implementing the hybrid-RL approach, the team ensured a smooth and controlled transition from the established query-based model to the RL-based solution. This incremental approach allowed for thorough validation and testing at each stage, mitigating any potential risks or disruptions. It also provided a robust and reliable framework that combined the strengths of both approaches.

From Genetic Algorithm to Reinforcement Learning

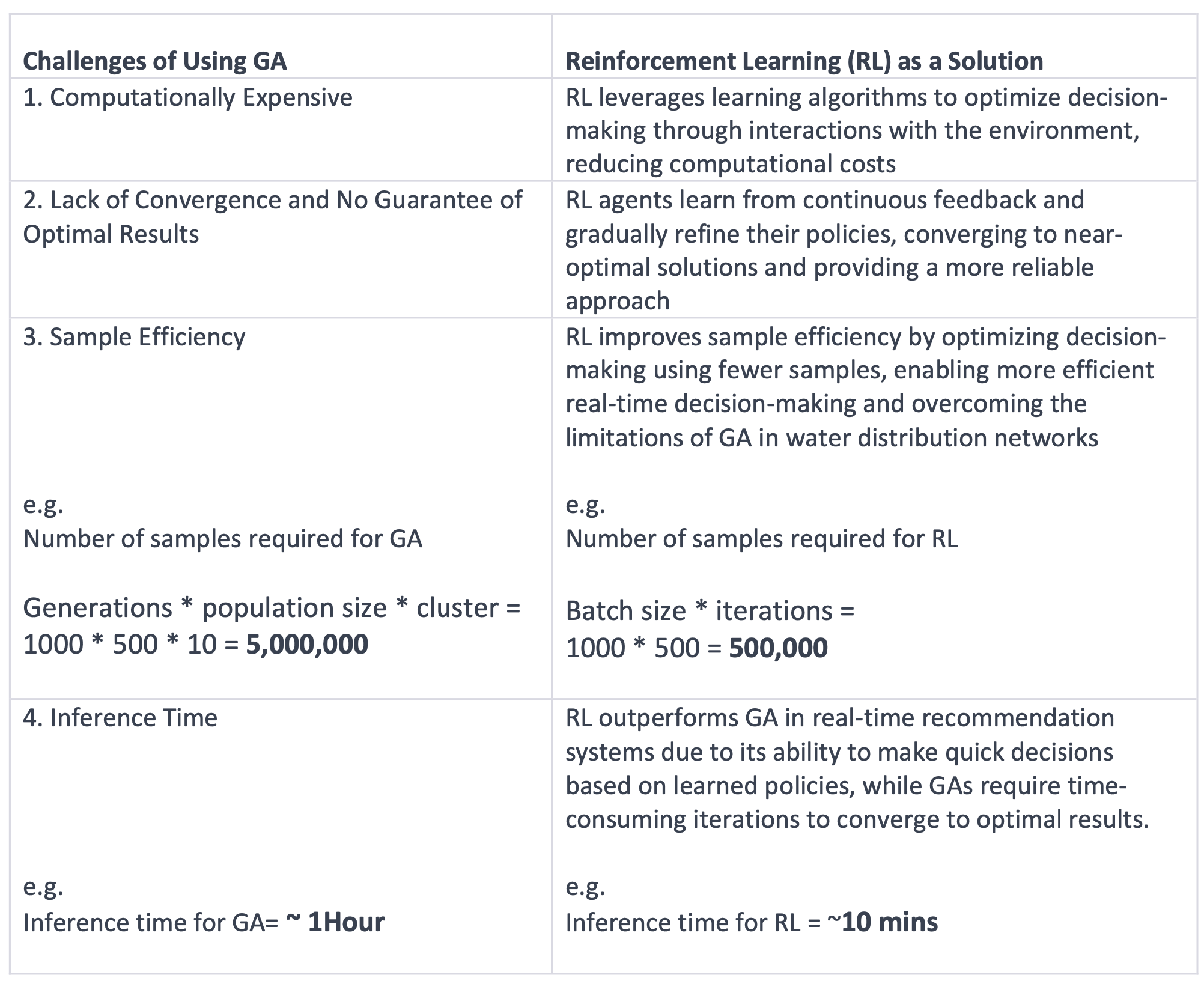

The following table provides a comprehensive comparison between Genetic Algorithms (GA) and RL in terms of their optimization techniques, convergence guarantee, computational efficiency, sample efficiency, and scalability and applicability.

Based on our research, RL surpasses GA in various aspects including computation efficiency, lack of convergence, absence of guaranteed optimal results, sample efficiency, and inference time.

RL Formulation

We frame our problem in the Markov Decision Process (MDP) which is a discrete-time stochastic control process for modeling decision making in situations where outcomes are partly random and partly under the control of a decision maker. The observation space includes tank levels, pumps status, forecasting of demand and energy tariff. In addition, there are three objectives we want to train the rewards contain tank level:

- Constraint Violation: Keep level under upper/lower constraints.

- Energy Savings: Energy optimization with respect to tariff structure.

- Toggle count: Keep count of change in control setpoints under threshold.

The Control horizon is being designed daily since the tariff and demand have clear daily and seasonal patterns, so the optimal solution should also follow the same daily frequency. The time interval for our control setpoints is 15 minutes, and each environment must finish 96 times control to provide the recommendation for next 24 hours.

In Part 2, we will show the promising results of our RL policy and how we deploy the RL policy into product.

Harsh Patel is a Principal Machine Learning Engineer, AEC

George Zhou is a Manager, Machine Learning, AEC

Ashley Wang is a Senior Machine Learning Engineer, AEC

Rodger Luo is a Principal AI Research Scientist, AI Lab at Autodesk Research

Get in touch

Have we piqued your interest? Get in touch if you’d like to learn more about Autodesk Research, our projects, people, and potential collaboration opportunities

Contact us