Encoding Experience: Conversations on Design, Emotion, and AI – Part Two

Exploring emotional sustainability in the built environment

Welcome back to our two-part blog post / lab report on Autodesk Research’s latest experiment: the initial Encoding Experience dinners. Make sure to check out part one if you haven’t already. With that, let’s get right to it!

Materials and Methods

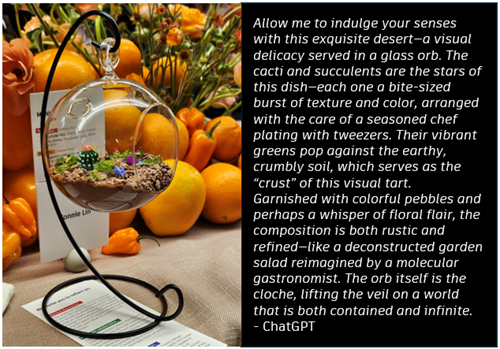

Our unique springtime Encoding Experience evenings took place in Boston and San Francisco. Attendees represented a carefully curated, rich mosaic of expertise, including architects, neuroscientists, environmental psychologists, public health scientists, computer scientists, urban data experts, and researchers in human-computer interaction. Stepping into the transformed office spaces adjoining our Technology Centers, guests began the evening with cocktails – in Boston mingling amongst striking “bouquets sous-vide” (Figure 1a) – and interacted with a generative AI shape-to-building prototype. As the evening flowed, we settled at the serpentine tables to dive into an ambitious single-thread conversation among roughly 15 people, guided by three prompts: finding a “joyprint,” an equivalent of the carbon footprint for human experience; collaboration and data about human experience; and industry tools and workflows.

Fueled by culinary delights, like the showstopping dessert featuring tiny edible gardens in suspended globes that had everyone reaching for their phones (Figure 1b), we embarked on a collective journey, exploring a new frontier in design. Microphones hidden in the scenery captured every spoken thought, with the full knowledge of the participants, of course!

Figures 1a, b: Experiential elements that transformed our conversational scenery, enhancing thoughts and flow. The associated descriptions are the results of an AI analysis that left the authors of this report in tears; they are included here for the discerning reader's amusement.

Results and Discussion

- Conversational focus

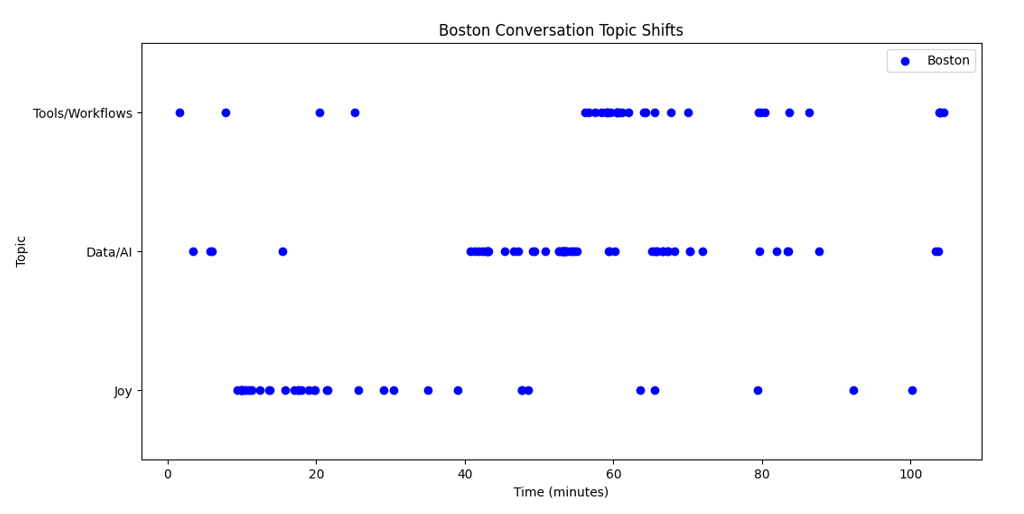

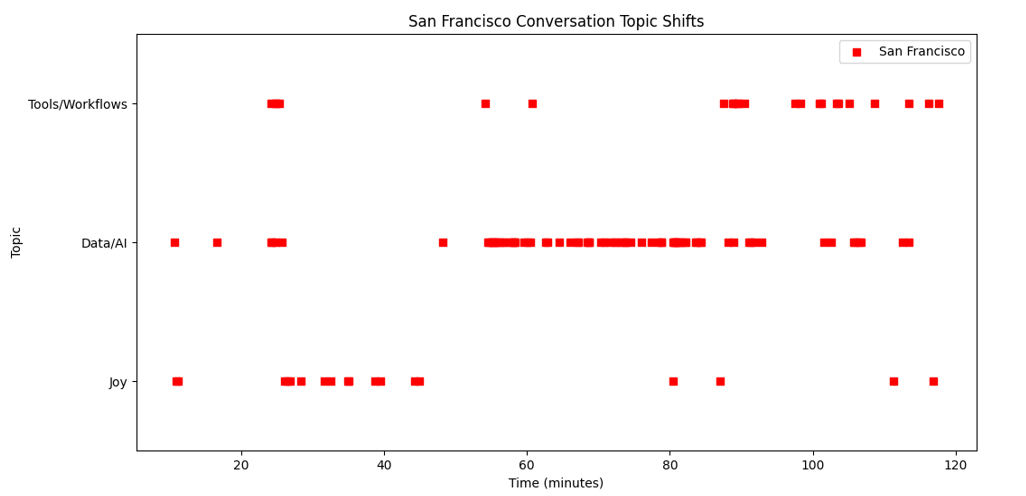

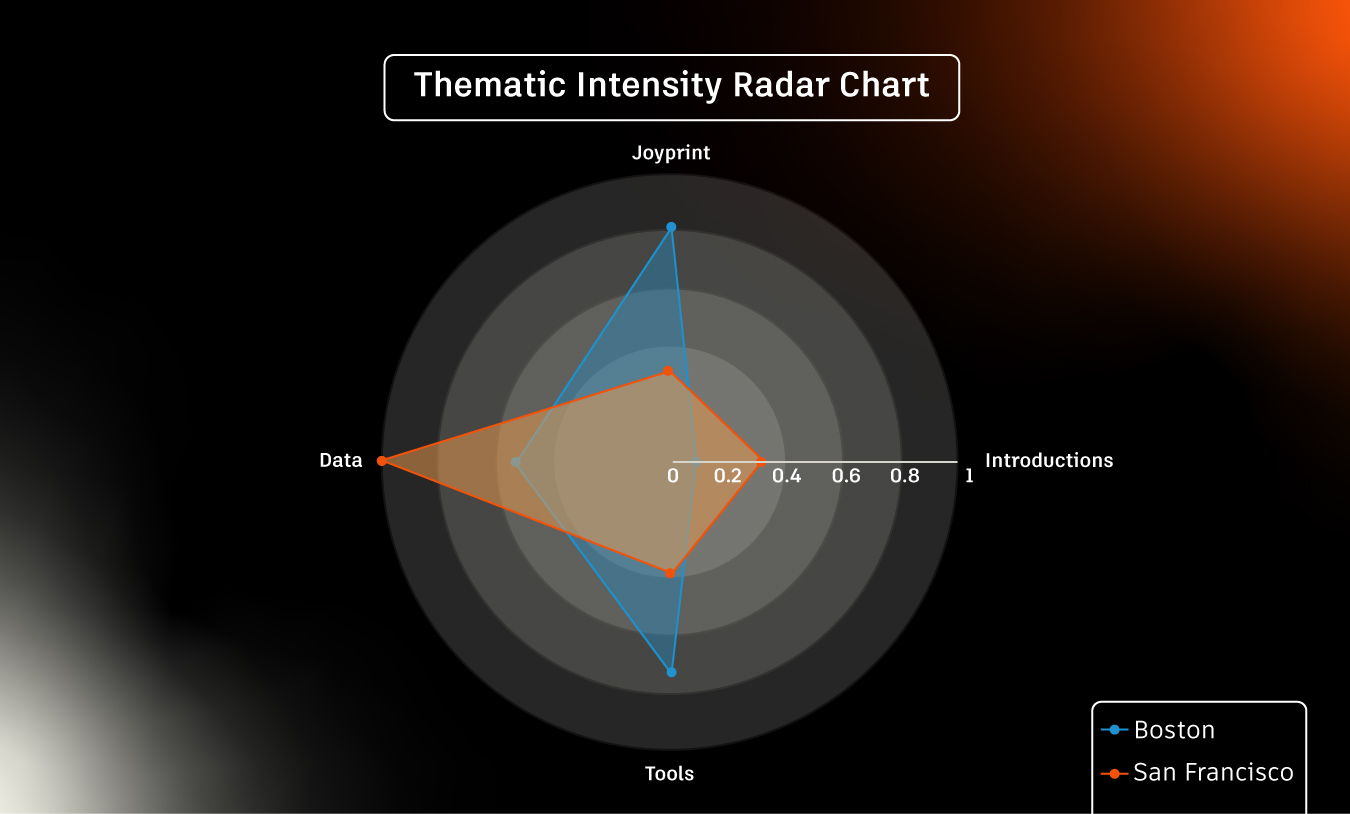

The conversations meandered in their own unique ways, with the Boston group spending 49% more time than San Francisco on the first (joyprint) prompt and 82% more time on the third (tools and workflows) prompt. San Francisco, meanwhile, moved on more swiftly but found itself fascinated by the second, data-related prompt, spending 179% more time on that discussion than the Boston attendees did. That said, perhaps because guests knew all the prompts a priori, concepts related to the other prompts were interwoven throughout the three broad sections of discussion. We therefore also tracked sets of keywords related to each prompt across the whole conversation. Figure 2 highlights their appearances over the course of the dinners in Boston and San Francisco, respectively. Figure 3 synthesizes the time spent on each section of the conversation with the frequency of topical keywords to give a more weighted view of each dinner's focus.

Figure 3: A synthesis of time spent on sections of the conversation and the frequency of topical keywords at the two dinners respectively.
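The comparisons above rest on two simple calculations: a relative difference in section length (“49% more time” means one group's time on a section was 1.49 times the other's) and a count of topical keywords over time. The sketch below illustrates both, plus one possible way to blend them into a single focus weight per prompt. It assumes a timestamped, word-level transcript; the keyword sets, the 10-minute bin size, and the blend parameter `alpha` are hypothetical choices for illustration, not the lists or weighting behind Figures 2 and 3.

```python
from collections import Counter

# Hypothetical keyword sets, one per prompt; the lists behind Figure 2 are not reproduced here.
PROMPT_KEYWORDS = {
    "joyprint": {"joy", "joyprint", "wellbeing", "carbon"},
    "data": {"data", "dataset", "sensor", "privacy"},
    "tools": {"tool", "tools", "workflow", "workflows", "software"},
}


def percent_more(a: float, b: float) -> float:
    """Return how much larger a is than b, as a percentage of b."""
    return (a - b) / b * 100.0


def keyword_timeline(words, bin_seconds: float = 600.0):
    """Count keyword hits per prompt in fixed-width time bins.

    words: iterable of (start_time_seconds, word) pairs from a timestamped transcript.
    Returns {prompt: Counter mapping bin index -> number of hits}.
    """
    timeline = {prompt: Counter() for prompt in PROMPT_KEYWORDS}
    for start, word in words:
        token = word.lower().strip(".,!?;:\"'")  # normalize case and trailing punctuation
        for prompt, keywords in PROMPT_KEYWORDS.items():
            if token in keywords:
                timeline[prompt][int(start // bin_seconds)] += 1
    return timeline


def focus_weights(section_minutes, keyword_hits, alpha: float = 0.5):
    """Blend each prompt's share of talk time with its share of keyword hits.

    alpha is a hypothetical mixing weight: 1.0 uses time only, 0.0 keywords only.
    The returned weights sum to 1.
    """
    total_time = sum(section_minutes.values())
    total_hits = sum(keyword_hits.values())
    return {
        prompt: alpha * section_minutes[prompt] / total_time
        + (1 - alpha) * keyword_hits[prompt] / total_hits
        for prompt in section_minutes
    }
```

For example, if one group spends 15 minutes on a section and the other 10, `percent_more(15, 10)` returns 50.0. Blending shares rather than raw counts keeps a long dinner from dominating a short one.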

- Emotional analysis

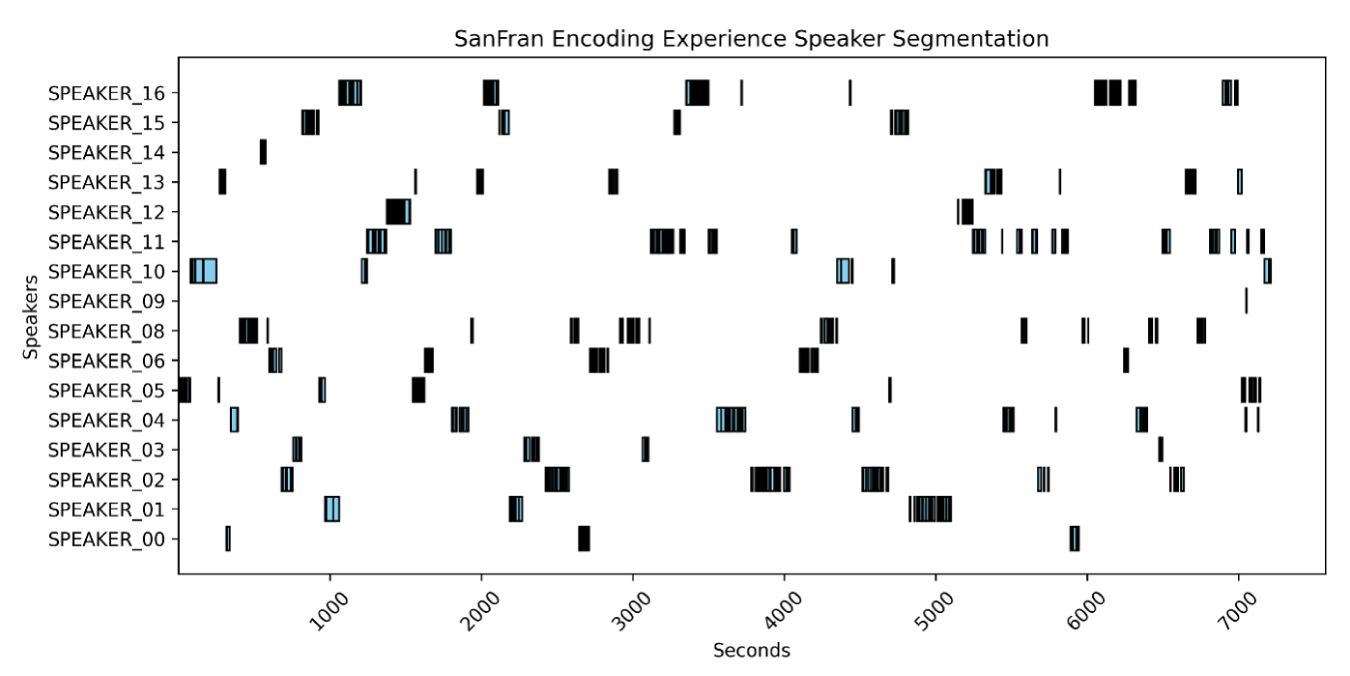

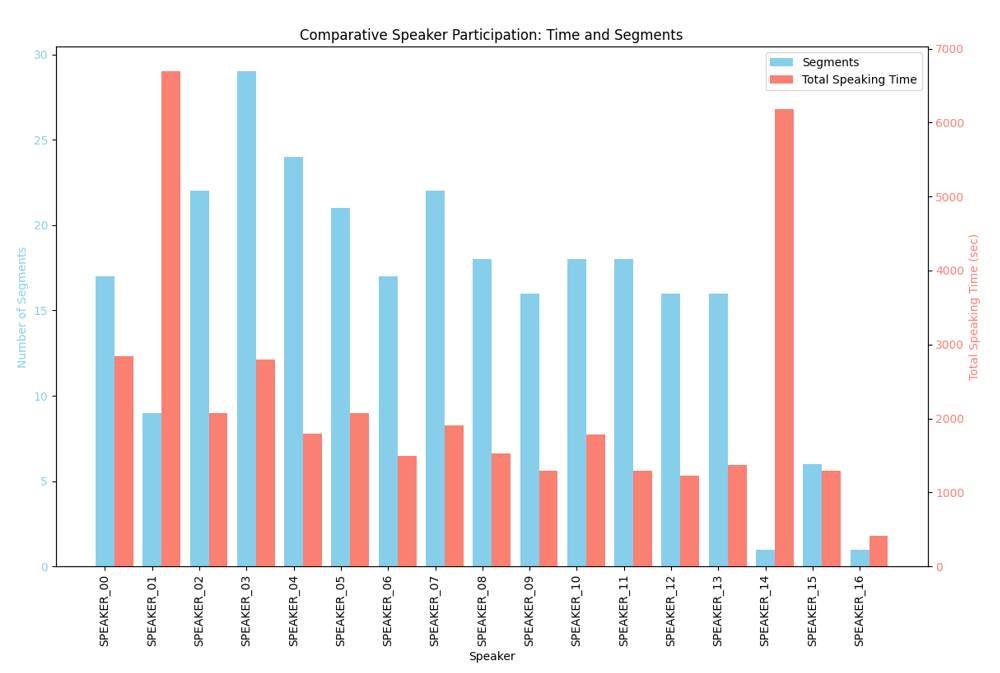

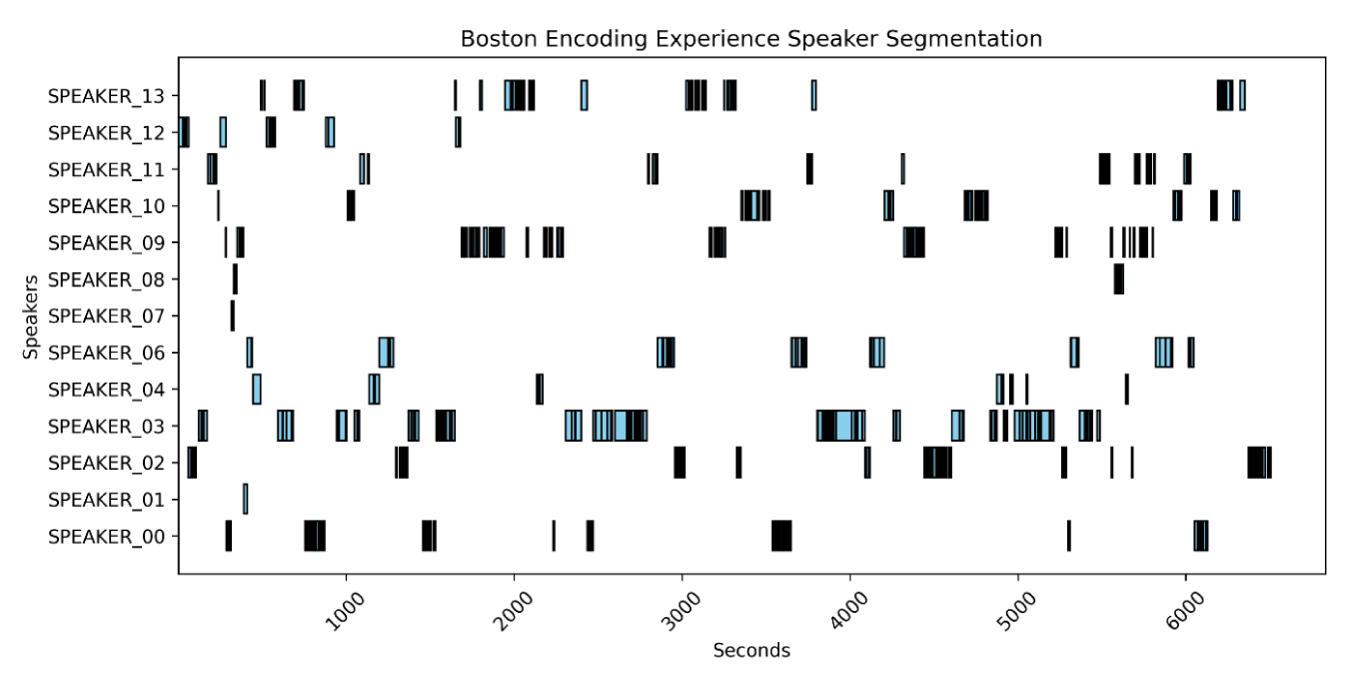

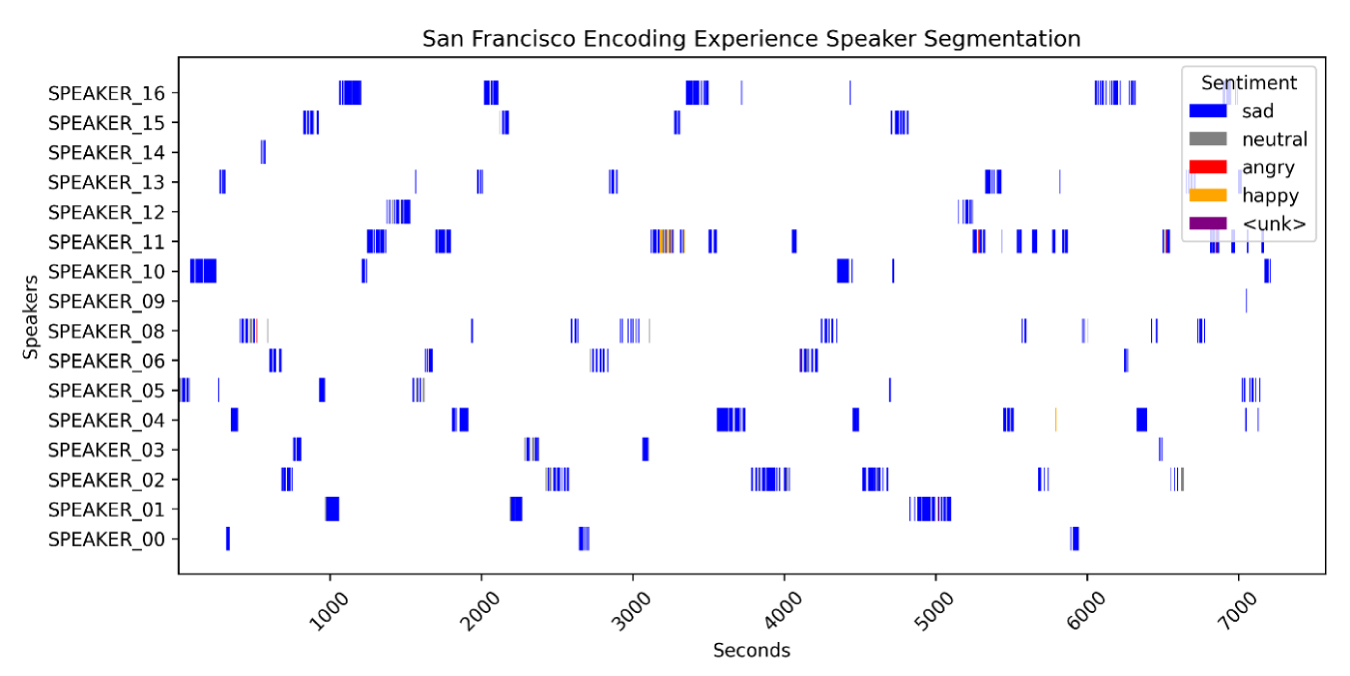

We analyzed the emotional content of the conversations using several strategies. First, we processed the audio to increase the volume, reduce background noise (including the clattering of plates and utensils), and enhance the clarity of the voices. Using speaker diarization software, we identified when each guest spoke throughout the evening (Figure 4), which enabled us to attribute emotional nuances to individual speakers. For efficiency and data clarity, we limited our analysis to speaker interjections longer than 5 seconds.

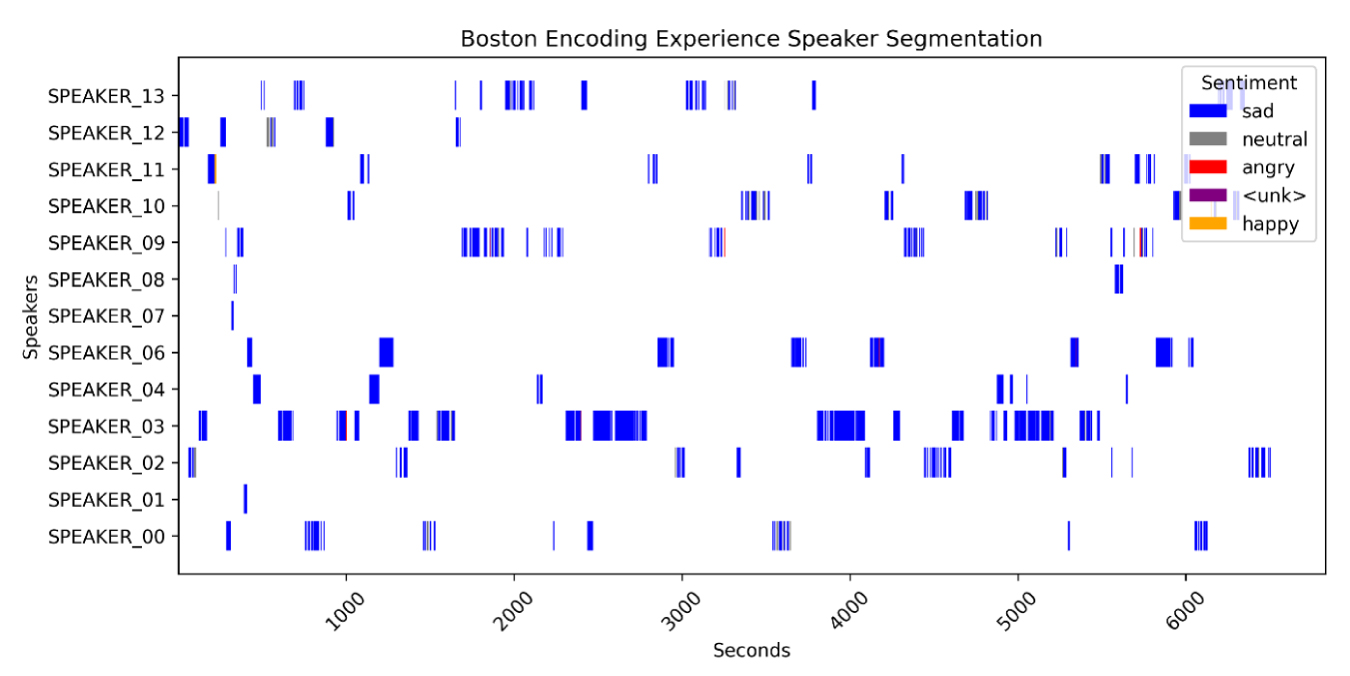
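The 5-second cut-off is easy to express once diarization output is in hand. The sketch below assumes each diarized segment has already been reduced to a speaker label with start and end times in seconds; the `Segment` type is hypothetical, not the output format of any particular diarization tool. It drops interjections of 5 seconds or less and totals the remaining talk time per speaker.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """One diarized speaking turn (hypothetical format)."""
    speaker: str
    start: float  # seconds from the start of the recording
    end: float    # seconds from the start of the recording

    @property
    def duration(self) -> float:
        return self.end - self.start


MIN_SECONDS = 5.0  # only interjections longer than this are analyzed


def long_segments(segments, min_seconds: float = MIN_SECONDS):
    """Keep segments strictly longer than min_seconds, preserving order."""
    return [s for s in segments if s.duration > min_seconds]


def talk_time(segments):
    """Total speaking time per speaker, in seconds."""
    totals = defaultdict(float)
    for s in segments:
        totals[s.speaker] += s.duration
    return dict(totals)
```

Filtering before any emotion model runs means every later method sees the same set of segments, so their outputs can be compared one-to-one.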

Having pre-processed the data, we applied three distinct emotional analysis methods. Our first approach employed an AI model specifically trained to determine emotion directly from audio files. This approach gave us the blues: as the results show (Figure 5), a surprising number of speaking segments were classified as “sad,” contrary to our perception of the overall mood and atmosphere at the dinners.

Figure 5: Sentiment analysis of speaking segments for Boston (top) and San Francisco (bottom) dinners using an AI model specifically trained to determine emotion directly from audio files.

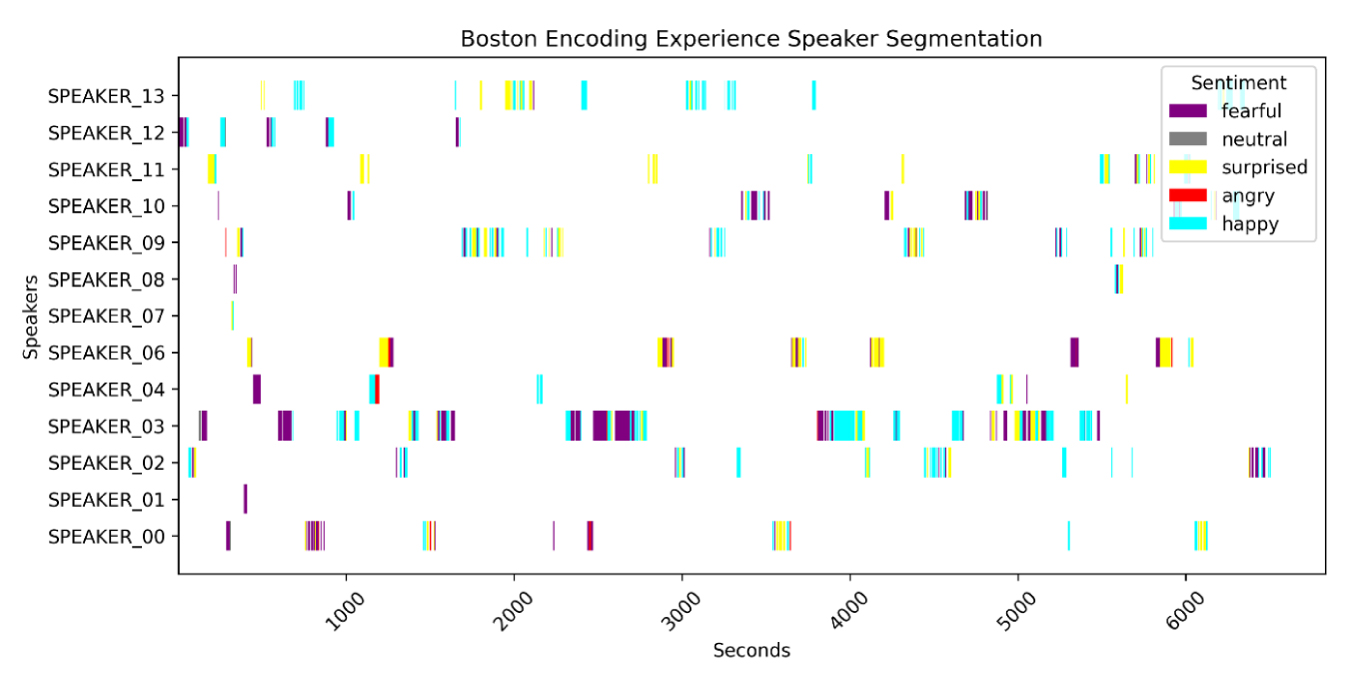

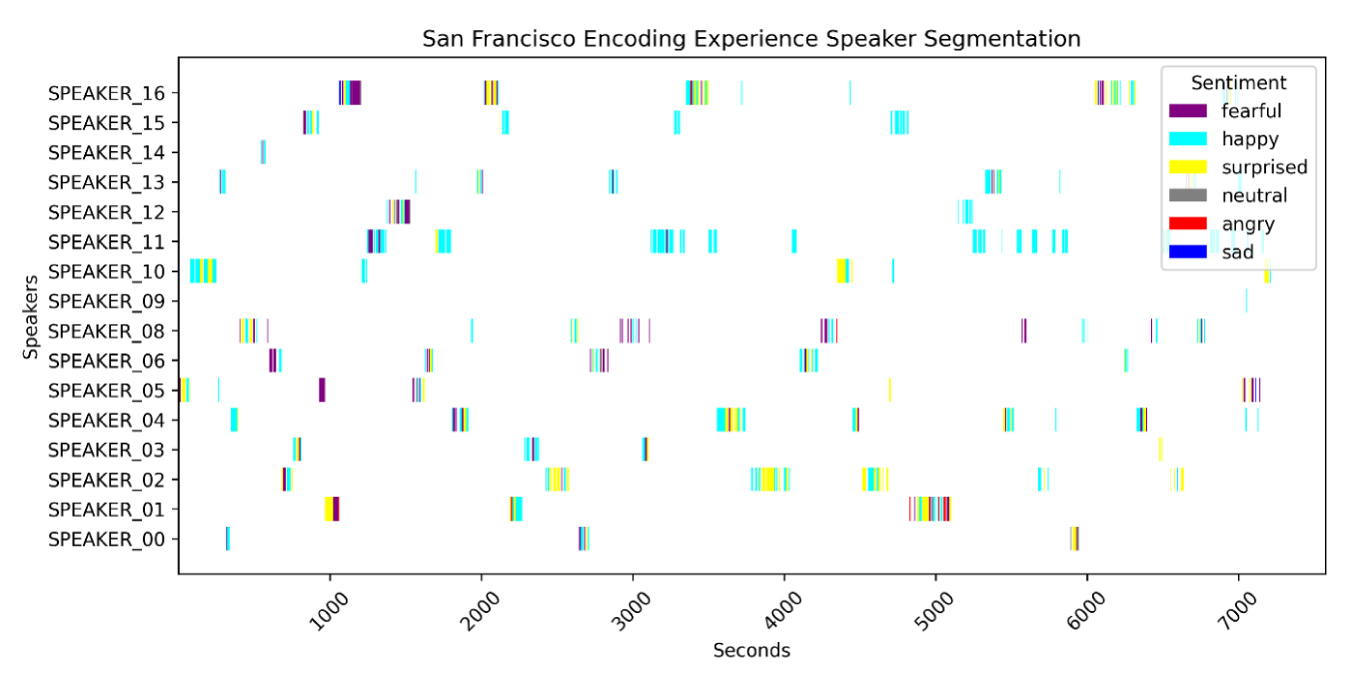
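Whichever model assigns the labels, its per-segment output can be summarized in the same way, which makes the three methods comparable. The sketch below is a hypothetical helper rather than our pipeline: it computes each label's share of speaking time (how prominent “sad” is, for instance) and the fraction of consecutive segments whose label differs from the one before, a rough measure of how jumpy the emotional tone appears.

```python
from collections import Counter


def label_shares(labelled_segments):
    """Share of total speaking time per emotion label.

    labelled_segments: list of (label, duration_seconds) pairs in speaking order.
    """
    totals = Counter()
    for label, duration in labelled_segments:
        totals[label] += duration
    grand_total = sum(totals.values())
    return {label: amount / grand_total for label, amount in totals.items()}


def switch_rate(labelled_segments):
    """Fraction of consecutive segment pairs whose labels differ (0 = steady, 1 = always changing)."""
    labels = [label for label, _ in labelled_segments]
    if len(labels) < 2:
        return 0.0
    changes = sum(a != b for a, b in zip(labels, labels[1:]))
    return changes / (len(labels) - 1)
```

Weighting by duration rather than counting segments keeps a handful of short interjections from skewing the overall picture.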

The next approach used a foundation audio-to-text model, fine-tuned to produce emotion labels. In plain English, an AI model for transcribing audio to text was retrained to output emotion labels instead of transcripts. The benefit of this retraining is that it reuses the audio features the model already computes for transcription, retargeting them to recognize emotions. In this case, the algorithm produced a colorful rainbow of emotions, suggesting a happier mood than our previous results (Figure 6). However, this analysis was also inconsistent with our experience, as it showed many more rapid shifts in emotional tone than we perceived during the evening.

Figure 6: Sentiment analysis of speaking segments for Boston (top) and San Francisco (bottom) dinners using a foundation audio-to-text model, fine-tuned to produce emotion labels.

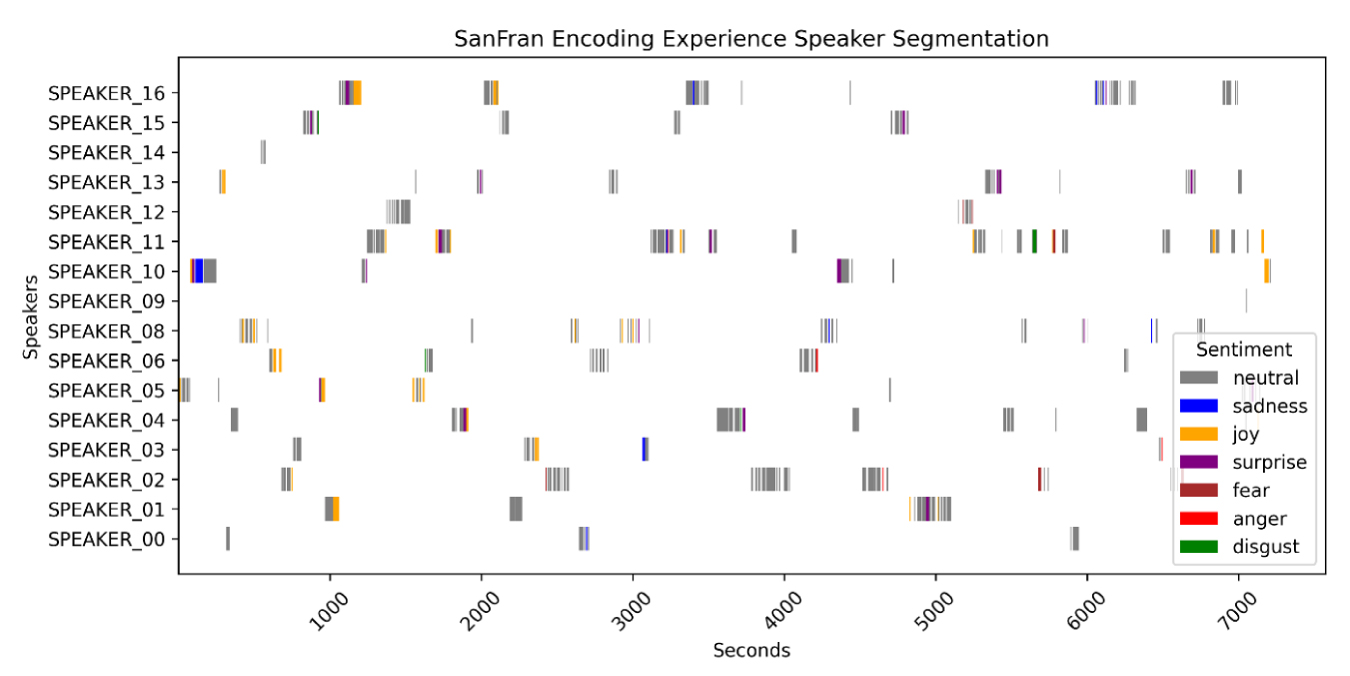

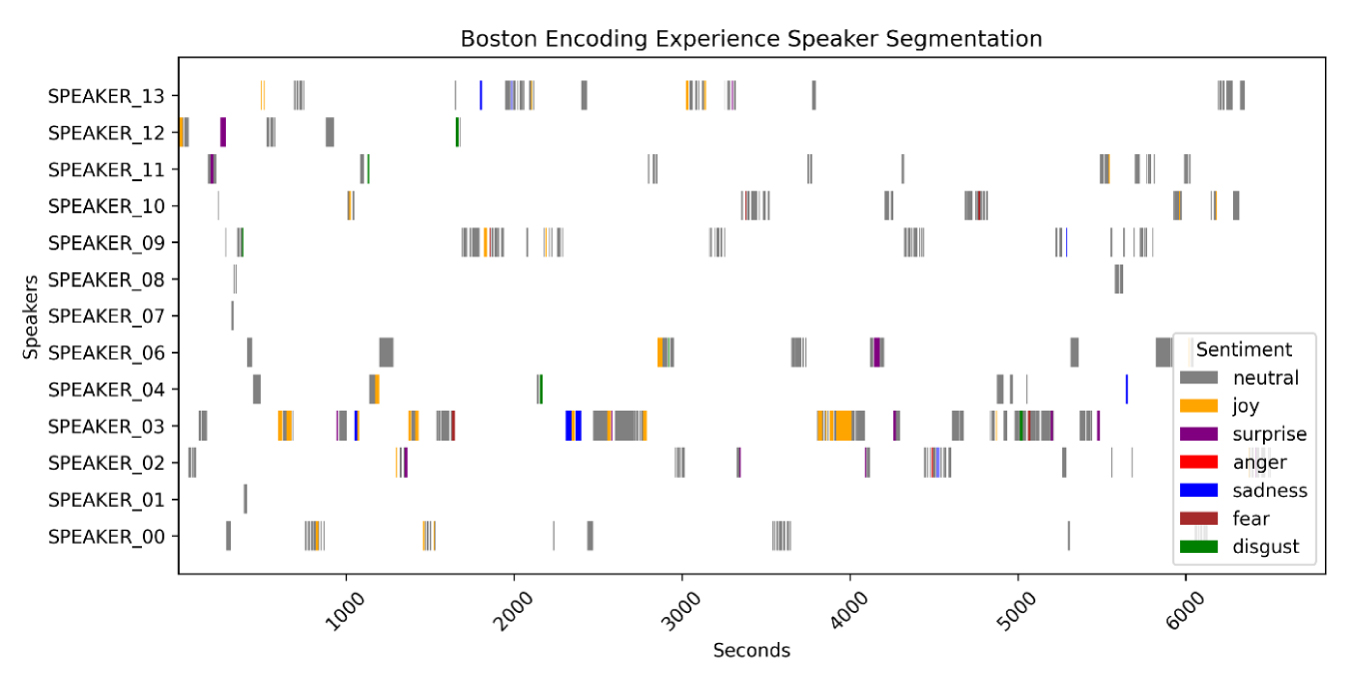
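The retargeting idea can be illustrated with its simplest variant: keep the transcription model's encoder frozen, average its per-frame features over a speaking segment, and train only a small classification head that maps that vector to emotion labels. The pure-Python sketch below assumes the encoder features are already available as lists of numbers; the toy two-dimensional vectors in the usage example are hypothetical stand-ins for real encoder output. Full fine-tuning, as opposed to this frozen-encoder sketch, would also update the encoder's own weights.

```python
import math
import random


def mean_pool(frames):
    """Average per-frame feature vectors (equal-length lists) into one segment vector."""
    n = len(frames)
    return [sum(column) / n for column in zip(*frames)]


def softmax(logits):
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


class EmotionHead:
    """Linear softmax classifier trained on top of frozen encoder features."""

    def __init__(self, dim, labels, seed=0):
        rng = random.Random(seed)
        self.labels = list(labels)
        self.weights = [[rng.gauss(0.0, 0.01) for _ in range(dim)] for _ in self.labels]
        self.biases = [0.0 for _ in self.labels]

    def probabilities(self, x):
        logits = [sum(w * v for w, v in zip(row, x)) + b
                  for row, b in zip(self.weights, self.biases)]
        return softmax(logits)

    def predict(self, frames):
        probs = self.probabilities(mean_pool(frames))
        return self.labels[probs.index(max(probs))]

    def train_step(self, frames, label, lr=0.5):
        """One gradient-descent step on cross-entropy loss; returns the loss before the update."""
        x = mean_pool(frames)
        probs = self.probabilities(x)
        target = self.labels.index(label)
        for k in range(len(self.labels)):
            # Gradient of softmax cross-entropy w.r.t. logit k is p_k - 1[k == target].
            grad = probs[k] - (1.0 if k == target else 0.0)
            self.biases[k] -= lr * grad
            for j, v in enumerate(x):
                self.weights[k][j] -= lr * grad * v
        return -math.log(probs[target])


if __name__ == "__main__":
    # Hypothetical toy features: two segments, each a few frames of 2-D "encoder output".
    head = EmotionHead(dim=2, labels=["neutral", "joy"])
    data = [([[0.9, 0.1], [0.8, 0.2]], "neutral"), ([[0.1, 0.9], [0.2, 0.8]], "joy")]
    for _ in range(100):
        for frames, label in data:
            head.train_step(frames, label)
    print(head.predict([[0.85, 0.15]]))
```

Because only the head is trained, the expensive part of the model is reused unchanged, which is the practical appeal of retargeting a transcription model.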

Finally, we performed an analysis based solely on audio transcripts, bypassing audio cues completely (Figure 7). Initially, we were reluctant to apply this analysis, as we hypothesized that voice tone would provide richer emotional context. However, a transcript-based approach would also provide an important baseline against which to compare the results of our other analyses. Contrary to our expectations, this method matched our own impressions best: a predominantly neutral conversation, with sprinkles of emotional expression during the evening. These results provide a more authentic reflection of the emotional tone of the dinner conversations, which were mostly academic and composed, with occasional lively exchanges sparked by strongly held opinions and humorous moments.
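Transcript-only analysis needs nothing but text. As a deliberately simple stand-in for a trained text emotion classifier, the sketch below labels each utterance by counting hits against a small, hypothetical emotion lexicon and falls back to “neutral” when no cue word appears. The lexicon and the `min_hits` threshold are illustrative assumptions.

```python
import re

# Hypothetical cue words per emotion; a trained text model would replace this table.
LEXICON = {
    "joy": {"love", "delighted", "wonderful", "fun", "haha"},
    "anger": {"frustrating", "annoying", "ridiculous"},
    "sadness": {"sad", "unfortunately", "disappointing"},
    "surprise": {"wow", "surprising", "unexpected"},
}


def classify_utterance(text: str, min_hits: int = 1) -> str:
    """Return the emotion with the most lexicon hits, or "neutral" if none reach min_hits."""
    tokens = re.findall(r"[a-z']+", text.lower())
    hits = {emotion: sum(token in cues for token in tokens) for emotion, cues in LEXICON.items()}
    best = max(hits, key=hits.get)  # ties go to the emotion listed first
    return best if hits[best] >= min_hits else "neutral"
```

A lexicon this small labels most measured, academic talk as neutral by construction, so a sketch like this is only useful as a baseline, which is the role the transcript analysis was meant to play.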

- Speaker interaction

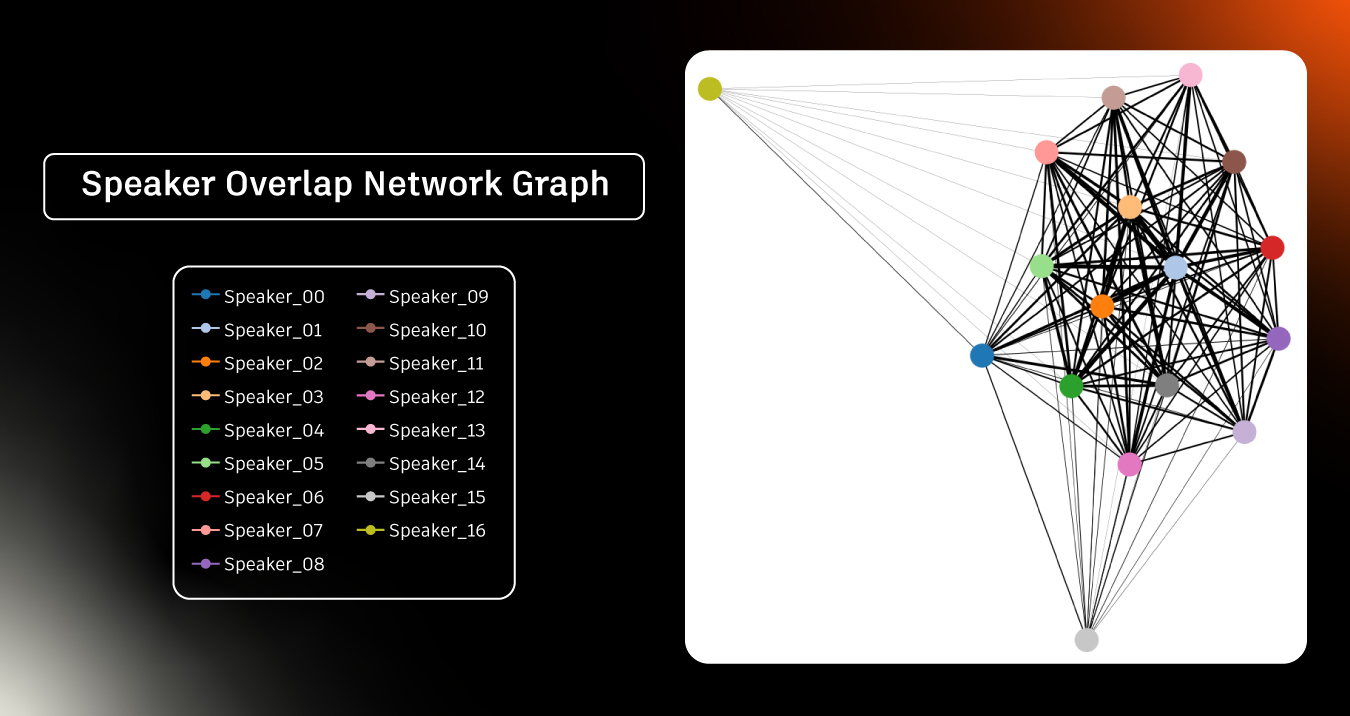

For this gastronomic experiment to be considered successful, we wanted to see lively engagement from attendees of various backgrounds, rather than a conversation limited to a select few voices. To verify our gut feeling that we had achieved this, we asked an LLM to dissect the transcripts into utterances per attendee and then visualize their interactions as a network diagram, starting with the Boston event. Figures 8 and 9 show these two hallucinations, respectively. We use the term “hallucinations” because, once we got over the magical feeling of an AI going from our raw transcript to report-ready figures in a matter of minutes, we realized it had invented an extra speaker and noticeably inflated the amount of speaking attributed to a few attendees, compared with our own (perhaps limited?) memories of the evening. We abandoned further attempts at this analysis and instead relied on qualitative feedback from attendees to support our sense that the evenings were inclusive.
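A deterministic first pass at the same questions is straightforward once every utterance carries a speaker label, for instance from the diarization step, and a simple comparison against the guest list can flag a speaker who was never there. The sketch below assumes a list of speaker labels in speaking order and treats each handover from one speaker to the next as an edge of the interaction network; both the representation and the handover-as-interaction proxy are illustrative assumptions, not how Figures 8 and 9 were produced.

```python
from collections import Counter


def interaction_graph(turns):
    """Build utterance counts and speaker-handover edges from speakers in speaking order.

    Returns (Counter of utterances per speaker, Counter of (previous, next) handovers).
    Consecutive utterances by the same speaker are not counted as handovers.
    """
    utterances = Counter(turns)
    handovers = Counter()
    for previous, current in zip(turns, turns[1:]):
        if previous != current:
            handovers[(previous, current)] += 1
    return utterances, handovers


def unknown_speakers(turns, attendees):
    """Speaker labels that do not match anyone on the guest list."""
    return sorted(set(turns) - set(attendees))
```

The handover counter can be passed directly to any graph-drawing tool as weighted edges; because every number traces back to a labeled utterance, inflated counts are easy to audit.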

- Content summary

Finally, undeterred by the hiccups in our previous AI-backed analyses, we extracted the key points raised throughout the discussions with the assistance of multiple LLMs. Following careful human re-curation and validation, we present them confidently here, grouped by our three conversational prompts and backed by illustrative quotes.

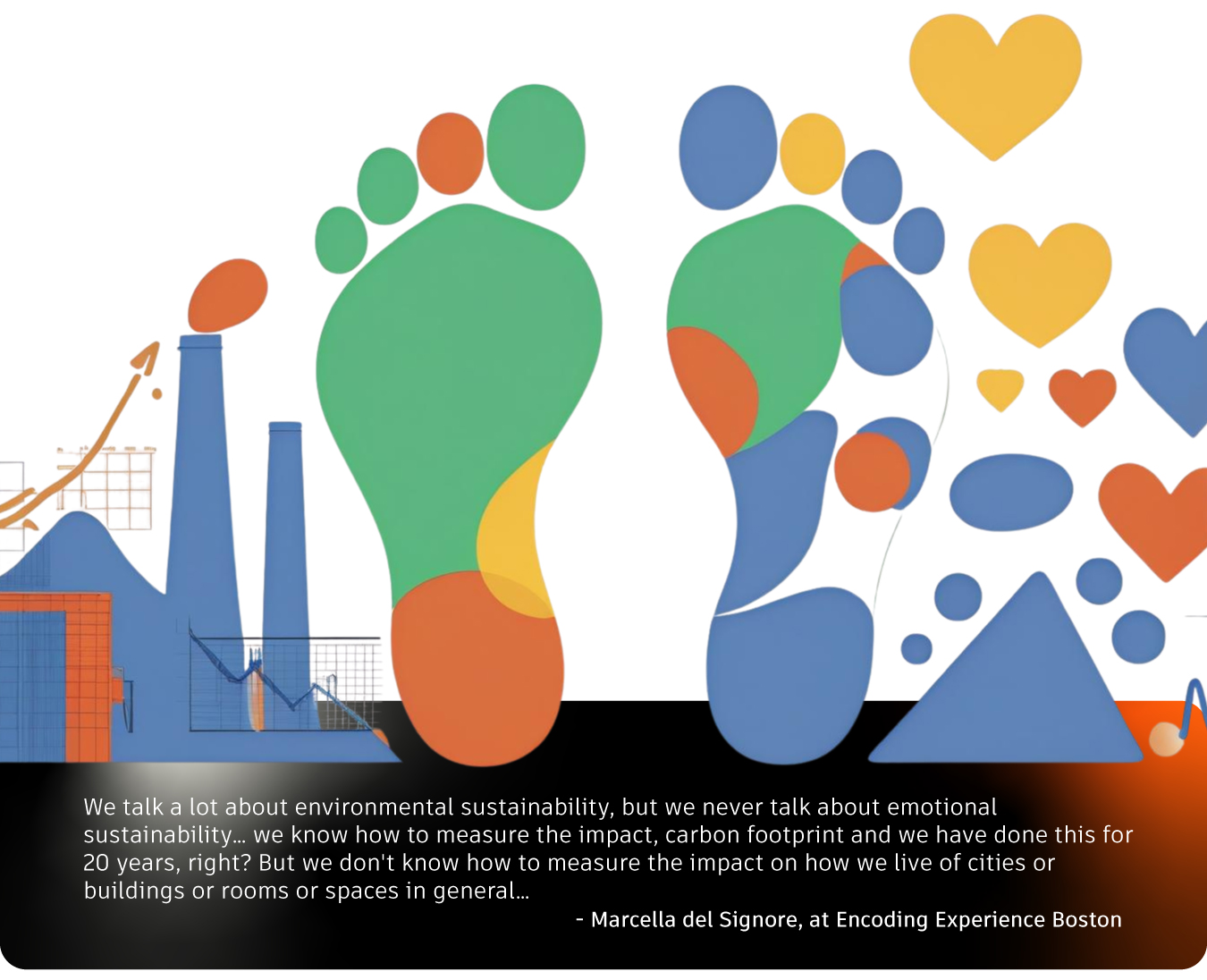

On Joyprint and finding the carbon equivalent to elevate human-centric design in practice

- The focus on efficiency and productivity in design, often driven by client demands and measurable outcomes, can overshadow the importance of well-being and experiential quality.

- Leadership, advocacy, and potentially policy changes are needed to drive the adoption of human-centric design practices and post-occupancy evaluations.

- New business models could also help: a “subscription” for architecture, where architects remain involved after initial construction to adapt spaces to changing occupant requirements, might add financial incentives.

- There is a growing recognition of designers' ethical obligation to consider the impact of their work on human flourishing, but we should keep training the next generation of designers and engineers on these imperatives.

- User participation and co-creation in shaping their environments, from small-scale interventions to broader authorship of public spaces, are linked to joy and engagement.

- The concept of “design justice” through the lens of emotion was introduced, emphasizing the need to design spaces that empower diverse groups of people and avoid biases.

- Measuring collective well-being is significantly more complex than measuring individual responses.

- “Joy” and other emotional responses are subjective and context-dependent, which makes joy an unlikely candidate for a footprint-equivalent metric.

On collaboration and data about human experience

- Sharing knowledge and data within the industry, rather than hoarding it as proprietary information, is necessary for advancing the field. The competitive nature of the architecture industry hinders innovation.

- Neuroscience, environmental psychology, and other social sciences are key fields for informing human-centric design. There is a need to better integrate data from these fields into architectural design processes.

- Current research methods are insufficient for capturing the nuances of human experience.

- Alongside interest in exploring physiological and subconscious measures of response, there is a desire to do more with qualitative methods, storytelling, and anecdotal evidence.

- Experimental studies are important for calibrating tools and generating rigorous data, but they frequently have limited sample sizes and address niche questions.

- Longitudinal data collection would be ideal, but is a major challenge.

- Excitement is brewing about the potential of AI to process large datasets and provide new insights into human responses to the built environment.

- Ensuring representation of diverse populations in data collection and design is a concern, particularly for marginalized groups like children, older adults, and individuals with disabilities.

On industry workflows & tools

- Current architectural tools primarily focus on geometry and physical properties, lacking the capacity to simulate or predict affective or experiential outcomes.

- Interdisciplinary collaboration from the very beginning of projects is seen as key for addressing the complexity of human experience in design.

- AI offers the potential to assist designers by simulating human responses and providing “partners” or “assistants” in the design process.

- The development of tools that make understanding human experience “second nature” within the design workflow is the “next frontier.”

- “Experiential programming” in the early design stages, focusing on intended feelings and experiences rather than just physical requirements, is a valuable approach.

- Concerns surfaced about the potential for technology to lead to homogenization, reduce creativity, and prioritize quantifiable metrics over the unpredictable and unexpected aspects of human experience. This could also inadvertently lead to widening existing disparities in access to positive environments.

Conclusion

Our Encoding Experience 2025 experiments show the power of multidisciplinary dinner gatherings. We were able to show, at least in part, that the discussions were emotionally varied and that the invited guests were well engaged. We observed regional differences in conversational focus, which may have been driven by the specific interests of attendees or by particular group dynamics. The key points identified across the two discussions paint a promising picture of a future in which architecture and urban design are driven more by human-centered needs.

Our provocative prompt of finding a “joyprint,” an equivalent of the carbon footprint, led to reflections on the changes needed in industry to fortify the growing interest in new kinds of metrics for the built environment, focusing on health, wellbeing, diversity, equity, and fulfilling human potential. At the same time, it was acknowledged that joy, a subjective, multi-faceted, and context-dependent emotion, was not likely to be “it.” The second conversational prompt had our dinner guests exploring the tension between the industry's need to collaborate on gathering data about human experience and its desire to protect intellectual property. There was considerable enthusiasm, especially in San Francisco, about the potential for AI to be the forcing function and the source of progress, even if, as the attempts in this report show, its capabilities for analyzing experience still need refinement, with a strong focus on protecting against hallucination. Crucially, the idea that a new set of human science disciplines ought to be welcomed into the design process received broad support. The final prompt, on the future of tools and workflows, led to the recognition that this is the “next frontier” and that extending existing approaches beyond geometric analysis and quantitative measures is a key enabler. Concerns were raised that over-encoding could negatively affect new designs by driving homogeneity and removing moments of surprise and unexpectedness.

Satisfied with this experiment, we are expanding the conversation with a similar dinner experience in London, followed by focused online workshops. In the meantime, a number of our dinner attendees kindly discussed their work in more detail in the Encoding Experience Research Connection series. In the future, we hope to collaborate on a “10 questions” paper to spur research that advances human-centric design in industry, whether through new metrics, datasets, breakthroughs in understanding human perception, or, “simply,” new workflows and tools for the AEC industry. We would also be excited to hear from anyone looking to collaborate in this space, particularly on seeding human experience data ecosystems for academic study.

With great thanks to our attendees in:

Boston

Leighton Beaman, Cornell University; Jacky Bibliowicz, Autodesk Research; Erika Eitland, Perkins and Will; Katherine Flanigan, Carnegie Mellon University; Francisco Colom Jover, MASS Design; Zach Kron, Autodesk; Carlos Sandoval Olascoaga, Northeastern University; Suchi Reddy, Reddymade; Dennis Shelden, Rensselaer Polytechnic Institute; Marcella Del Signore, New York Institute of Technology; Matthew Spremulli, Autodesk Research; Margaret Tarampi, University of Hartford; Cleo Valentine, Harvard University; Ray Wang, Autodesk Research; Kean Walmsley, Autodesk Research.

San Francisco

Burçin Becerik, University of Southern California; Sarah Billington, Stanford University; Gail Brager, UC Berkeley; Eve Edelstein, Stanford University; Dianne Gault, Autodesk Research; Maryam Hosseini, UC Berkeley; Mike Haley, Autodesk Research; Anita Honkanen, Stanford University; Yuhao Kang, University of Texas at Austin; David Kirsh, UC San Diego; Connie Lin, Corgan, Conscious LA; Blaine Merker, Gehl; Sam Omans, Autodesk; Eric Paulos, UC Berkeley; Kean Walmsley, Autodesk Research.

Get in touch

Have we piqued your interest? Get in touch if you'd like to learn more about Autodesk Research, our projects, people, and potential collaboration opportunities.
